4 Ways to Connect Tableau to MongoDB

MongoDB is one of the most popular of a new breed of “NoSQL” databases. NoSQL databases are essentially the opposite of relational databases such as Oracle, SQL Server, MySQL, PostgreSQL, etc. They allow for the storage of unstructured and semi-structured data as well as the ability to maintain flexible schemas.

Here are a few key things to know about MongoDB:

- Focuses on the storage of “documents” (as opposed to graph databases or other types of NoSQL databases).

- Data is stored in JSON format (technically, they store data in a binary representation of JSON they call BSON).

- Built with developers in mind, so it has lots of tools, APIs, and drivers to meet the needs of virtually any developer.

- Because it does not require the creation of rigidly-defined schemas, it provides developers with lots of flexibility. The focus can be shifted from “schema-on-write” to “schema-on-read.” This creates much more agility for developers.

- Built with a distributed architecture so it is highly available, scalable, durable, and reliable out-of-the-box.

- It is open source, though MongoDB also sells licensed enterprise versions (more on that later).

Because of the above, MongoDB is a fantastic generalized database for any type of data from unstructured and structured data (and everything in between). It makes a great platform for small to medium data lakes. And it is one of the top choices of database for many modern developers.

Connecting Tableau to MongoDB

With the continued growth and popularity of the MongoDB platform, we as analytics professionals, will likely cross paths with it at some point and will need to connect Tableau (or other BI tools) to it. The important thing to remember here is that Tableau expects data to come in a relational format—tables with columns and rows that are related to other similarly structured tables. But MongoDB is not relational, so that immediately creates some challenges. Some restructuring of the data will inevitably be necessary for it to be consumable in Tableau. Thus, connecting to MongoDB is a bit trickier than connecting to more commonly used relational databases.

In this blog, I’m going to provide you with 4 options for connecting Tableau to your MongoDB data. For my examples, I’ll be using an instance of MongoDB Atlas, Mongo’s cloud database-as-a-service offering. You can connect to it using the following connection details:

Cluster Name: Mongo-shard-0/mongo-shard-00-00-pw3el.mongodb.net:27017,mongo-shard-00-01-pw3el.mongodb.net:27017,mongo-shard-00-02-pw3el.mongodb.net:27017

Username: nosql

Password: nosql

The cluster has a number of sample databases installed already, one of which is similar to Tableau’s Superstore, called sample_supplies; it contains a single collection called sales, which I’ll be using in the examples below.

Note: What we call Tables in relational databases are called Collections in MongoDB. Rows or Recordsare known as Documents.

Option 1: Export Data as JSON

The first option is to export data out of MongoDB into JSON files, then leverage’s Tableau’s native JSON connector. To do this, we’ll need to do the following:

1) On the computer running MongoDB, open a command line. If you cannot connect to the computer, as in the case with our Atlas instance, you’ll need to install the MongoDB utilities on your computer. To do this, download MongoDB and choose the custom install option. Deselect all options except for the Import/Export tools.

2) Open a command line and navigate to the directory in which the utilities were installed (on Windows, it should be something like this: C:\Program Files\MongoDB\Server\4.0\bin).

3) Run mongoexport using the following syntax (red text indicates that we need to plug in our own values here):

mongoexport --host <Cluster or Host Name> --ssl --username <Username> --password <Password> --authenticationDatabase admin --db <Database Name> --collection <Collection Name> --type json --out <Output JSON File Name>

To export the sales collection from the sample_suppliesdatabase in our Atlas cluster, use the following command:

mongoexport --host Mongo-shard-0/mongo-shard-00-00-pw3el.mongodb.net:27017,mongo-shard-00-01-pw3el.mongodb.net:27017,mongo-shard-00-02-pw3el.mongodb.net:27017 --ssl --username nosql --password nosql --authenticationDatabase admin --db sample_supplies --collection sales --type json --out c:\sales.json

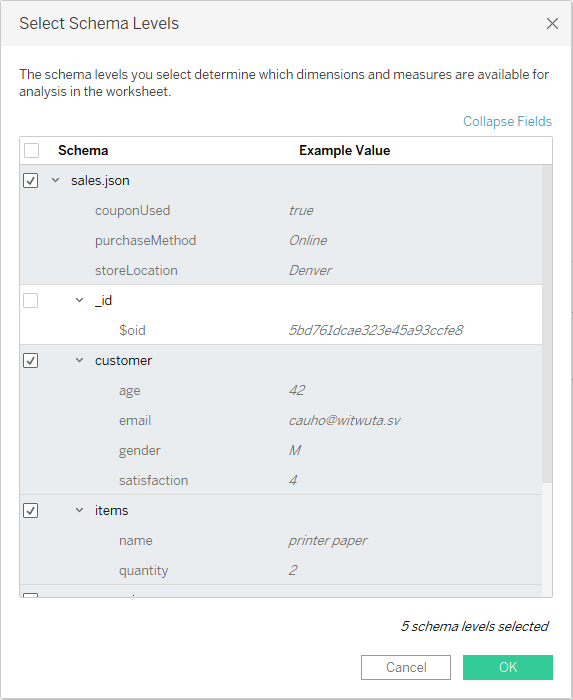

4) Use Tableau’s JSON file connector and select the json file. Since the JSON file is not structured in a relational format, Tableau will prompt you to select which “schemas” you wish to include.

Once you select the schemas, Tableau will flatten the data, repeating fields in multiple rows, as shown below.

This is somewhat similar to if you were to join a Salestable to a Customer table. That customer’s data would then be repeated for each row.

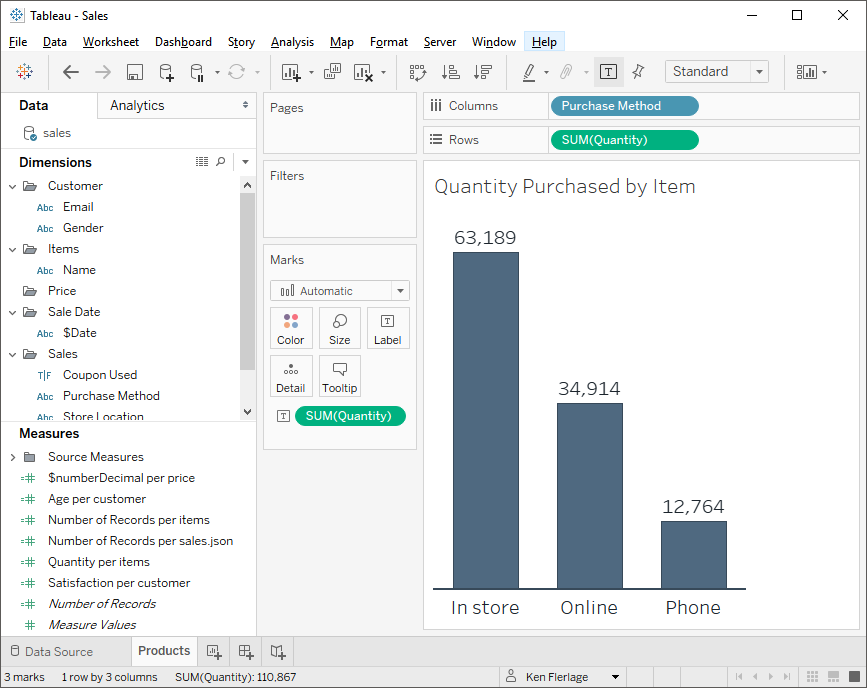

5) Create your visualization.

Note: I’ve used the recommendations in Ryan Sleeper’s fabulous blog, 3 Ways to Make Beautiful Bar Charts in Tableau to create this bar chart. I’d highly recommend his suggestions to give your bar charts some extra appeal.

There are, of course, some problems with this method. First of all, it’s very manual—it requires you to export each collection into JSON files every time you want to update your visualization. This could, of course, be addressed by creating some automated process that exports the collections to JSON files on a regular basis, but that could prove to be a lot of work. Even with automation, however, performance could be a problem—instead of leveraging the horsepower built into the database, you are now reliant on a file sitting on a hard drive, which will not perform as well as a direct connection to the database. Finally, the JSON file connector is pretty limited. You cannot join multiple JSON files to each other and can’t even join to other types of files or databases, so you may struggle to piece together a data model that has the flexibility you need.

Option 2: Third Party ODBC

Multiple third-party providers have created ODBC drivers for MongoDB, including Simba, Progress, DevArt, CData, EasySoft, and others. Here are the steps for leveraging a third-party ODBC driver (I’ll be using the Simba driver but most are pretty similar):

1) Download and install the driver. In general, the installs for these drivers tend to be very straightforward.

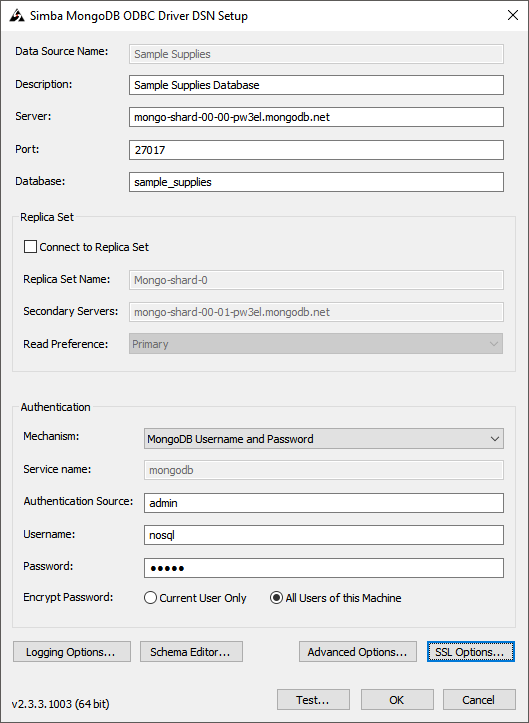

2) Set up your ODBC data source. Here’s how I set up my connection to the MongoDB Atlas cluster using the Simba driver:

Note: I also opened the SSL settings and checked the “Enable SSL” box.

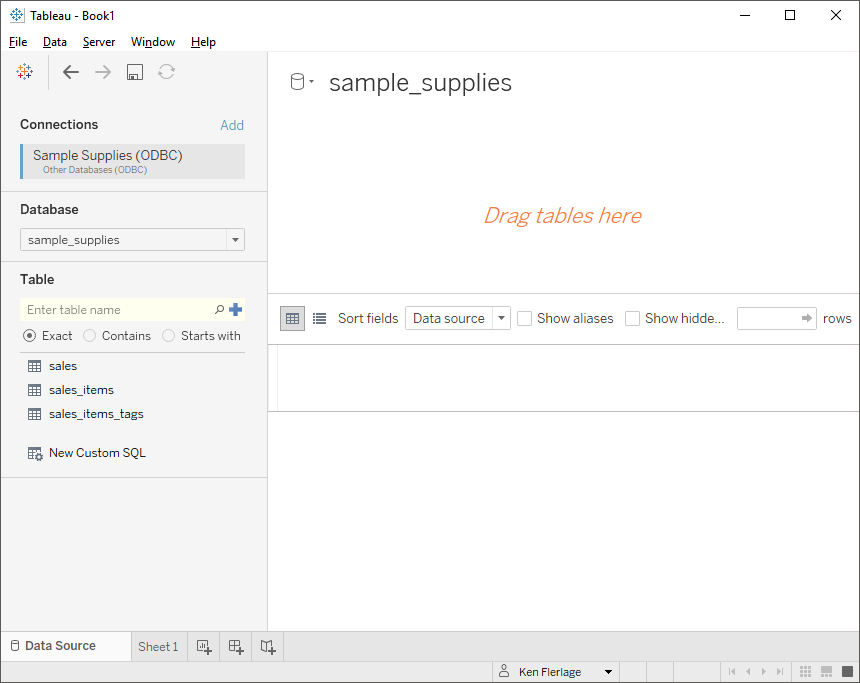

3) Use Tableau’s ODBC connector to connect to the new ODBC data source.

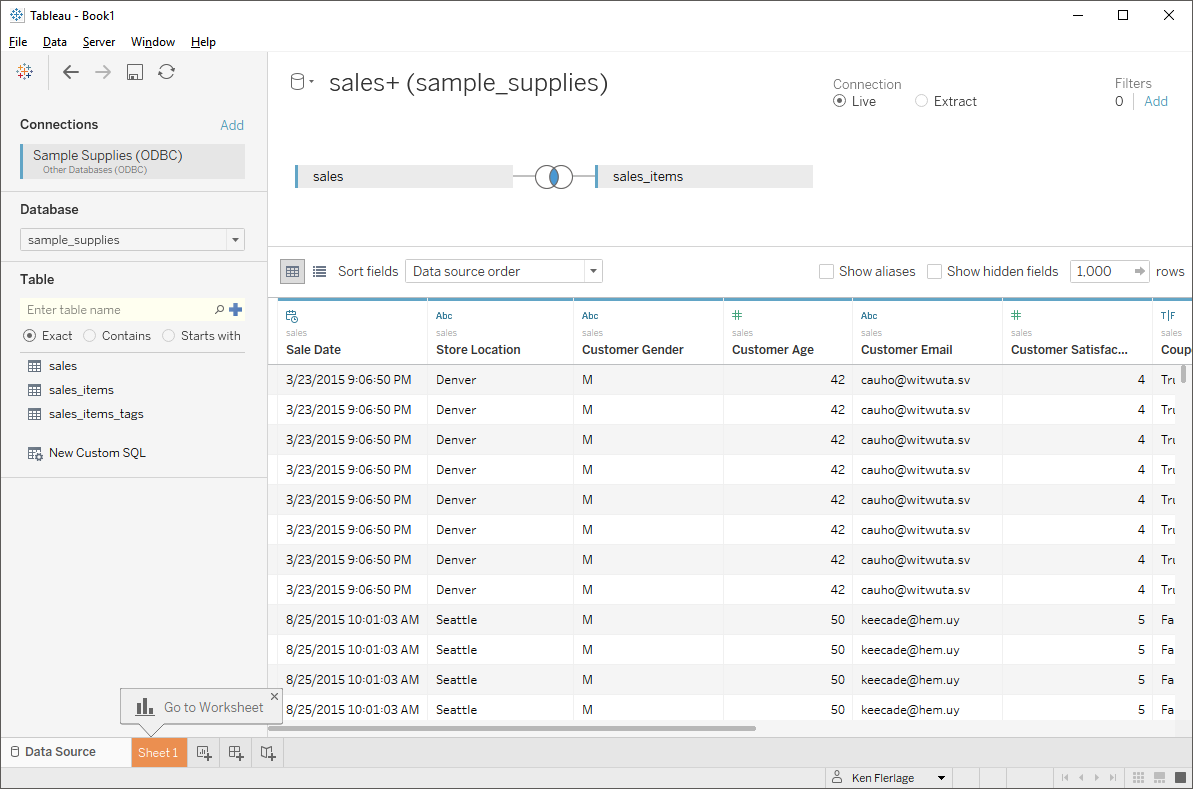

Notice that the driver automatically breaks the collection into multiple tables. This is because the driver must translate the JSON data into a relational format. This requires normalization of the collection into multiple tables. By default, the drivers will automatically perform this normalization, but some of them, including Simba, provide you with additional tools which allow you to modify the automatically-generated schemas.

4) Create your data model.

5) Create your visualization.

This method also has some drawbacks. For one, the third-party driver is just another tool you have to maintain. In addition, these drivers are not free, so there will be some cost involved. I’ve also found that these drivers can have mixed results, in terms of both the table normalization process and performance, especially when dealing with more complex JSON structures.

Option 3: The BI Connector

MongoDB offers a “Connector for Business Intelligence” which essentially flattens your MongoDB data, in real-time, so you can connect Tableau (and other tools) directly to the database. This connector is a separate install, which can be set up on one of the MongoDB servers or another server (it does not have to be installed on each user’s client or the Tableau Server). In the case of Atlas, it is built into the platform.

The connector leverages the MySQL wire protocol to translate MongoDB’s JSON structure into a flattened relational structure. Yes—I said “MySQL”—the end result of the connector is a sort of virtual MySQL database, which you can interact with just like any other MySQL database. But, because MongoDB collections can contain unstructured or semi-structured, a single collection may result in multiple tables, just like the third-party ODBC drivers.

Note: Tableau has a native connector for the BI Connector, which leverages the MySQL driver. As of the writing of this blog, the BI Connector only supports version 5.1.x of the MySQL driver. If you have the version 8 driver installed, you will likely run into problems.

The BI Connector, in my opinion, the best way to consume your MongoDB data in Tableau as it is an automated, real-time solution that does not require any manual intervention or use of third-party products. But here’s the rub—for Production workloads, the BI connector is only available via MongoDB’s enterprise offerings, Enterprise Advanced and Atlas Enterprise Advanced. That said, if you have the budget available, then I highly recommend this option.

A quick note on custom SQL. You can use custom SQL with the BI Connector, but it does not support everything you may be accustomed to. So, if you need to write custom SQL, do your best to keep your SQL as simple as possible. Here's a reference of Supported SQL Functions and Operators.

A quick note on custom SQL. You can use custom SQL with the BI Connector, but it does not support everything you may be accustomed to. So, if you need to write custom SQL, do your best to keep your SQL as simple as possible. Here's a reference of Supported SQL Functions and Operators.

Option 4: Create a Data Pipeline

A final option is to create a data pipeline or ETL workflow that moves data out of MongoDB and into some more structured database, such as a data warehouse. There are lots of options available for programmatically extracting data so you can then restructure and write it to another database platform. Or, if you do not want to write code, you can leverage either third-party ODBC or the BI Connector within an ETL workflow.

You might be asking why, if we’re moving data out of it for analysis, we’re even storing data in MongoDB in the first place. But remember, we’re only part of the puzzle here. As noted previously, MongoDB is great for application developers. For example, one of the huge advantages for them is that they can write data to the database in a loosely structured manner without spending too much time focused on pre-defining a structured schema. This can be a huge timesaver! So, even though it may be a bit more difficult for us to get data out, it has many benefits that can often outweigh these drawbacks.

Wrap-Up

I love MongoDB. It’s a fantastic NoSQL platform that provides a ton of flexibility for developers and lots of great features for the rest of us. I’m hopeful that the tips I’ve provided here help you to better understand how the platform works and allows you a few different options for consuming its data within Tableau. Thanks for reading!!

Ken Flerlage, July 21, 2019

We've tried to recreate the set up with our own MongoLabs instance. However when we try to connect to it using the Simba ODBC driver, it returns the error "namespace not found (Error Code: 8000)"

ReplyDeleteOn the other hand, we can connect to your MongoLab instance just fine!

We looked up the error and it indicates that we're trying to perform an action when there's no collection. We checked out database and confirmed that we do have a collection.

We ran mongoexport on our database and collection and it worked. As a result, we're at a bit of a loss.

What step(s) are we missing?

Thanks.

Are you talking about an mLab instance (https://mlab.com/) or is this just a locally installed instance of MongoDB?

DeleteIt was an mLab instance. In our case, spinning down the instance and spinning up a new one resolved the issue.

DeleteVery strange indeed...

Interesting. Glad you got it working though!!

DeleteWe're starting to jump into the NoSQL databases so I'm reading up - this was very helpful. One thing I'm sensing is that Tableau wasn't originally built with this non relational database structure in mind (it didn't really exist when the product was first created).

ReplyDeleteHow do you think the new relationship model in the Tableau 2020.2 release will incorporate and work with these types of structures?

This is a good question. Most BI platforms are still trying to figure out how to handle this non-structured data. And, of course, MongoDB has picked up on that, creating the BI connector to help bridge that gap. They have also created their own charting product that is able to consume NoSQL data natively. I don't know that the relationship model will have any impact on how we work with unstructured and semi-structured data as that is really just more intelligent mechanism for forming SQL queries (I have a blog on the new data model if you're interested). The problem, in my mind, is that, BI really does require structure. In order to understand data and find insights, some kind of structure is critical. While it would be nice to connect directly to NoSQL databases without the need for some middle step, I think that middle step is still really important.

DeleteThis was a great post, thanks for the read! I've found it's hard to find much good detailed information online about how to create your own data pipeline with mongoDB for the purposes of BI software like Tableau. Do you know of any good resources for learning more about how to do this?

ReplyDeleteI don't have much good advice for this, to be totally honest. There are some tools built into Mongo for this, but I haven't personally used them. My personally approach entails using an ETL tool and the BI connector. I use the BI connector to bring the data into my data warehouse and "relationalize" it, then I perform various transformations on that data, which can then be easily consumed by Tableau. Of course, Mongo is really built with developers in mind so you can always write code to act as your data pipeline. If you're a Python developer, you might want to check out Apache Airflow. Here's a blog on how to move MongoDB data to Redshift using Airflow which might help to get you started: https://www.astronomer.io/guides/mongo-to-redshift/

DeleteError Message :

ReplyDelete“An error occurred while communicating with MongoDB BI Connector

There was a problem while running the connector plugin. Reinstall the plugin or contact the plugin provider.

Note that you might need to make local configuration changes to resolve the error.

Error Code: 37CE01A3

Running the plugin script component exceeded the timeout: 3000ms

Unable to connect to the MongoDB BI Connector server "10.26.129.196". Check that the server is running and that you have access privileges to the requested database.”

Why we are getting above error for MongoDb connection with tableau by using MongoDb BI connector.

I'm not really sure to be honest. Have you been able to connect to the connector using any other tools? Could you try MySQL Workbench?

Delete